AI Call Summaries Increase Call Center Productivity

I validated and shipped an end-to-end call summarization service that increased a fintech’s call center productivity by over 20% while increasing the quality of client service.

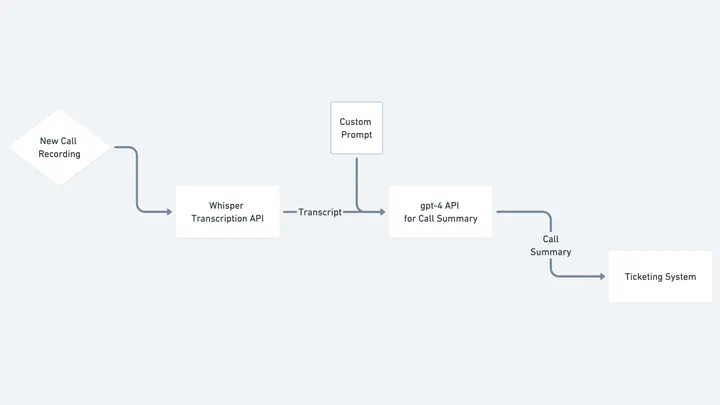

Example data pipeline for call summarization

Old patterns of software development, in which layers of business logic are built into code, don’t apply in the same way to production-grade AI services. That’s because there’s very little innovation in the pipelines used to connect various LLM-enabled APIs to product data. Instead, what’s novel is the input data provided to those pipelines and the prompts that combine that data with the LLMs to generate useful outcomes.

For Fintech, this new pattern of product development means giving up the ability to specify and statically check business logic. Therefore, new patterns for testing for and ensuring regulatory compliance must also be developed in order to ship product with minimal risk.

As the company’s first AI-driven use case, this product is a case study in both the enormous potential of AI-driven automation to drive productivity gains and the unique opportunity Fintechs have to leverage existing compliance processes to ensure reliable and accurate outputs. I used the call center’s existing quality assurance processes to verify and monitor the service’s high-quality results while also delivering increased productivity. I also learned firsthand what it’s like to do prompt engineering at scale.

Business Case

The call summarization service was built to increase productivity in a Fintech call center where service agents, due to regulatory requirements, had to record details about the subject and contact following each call. Writing these records often took 25% of the average call time, and the notes were riddled with shorthand that was difficult or time-consuming to decipher.

The call summarization service was able to render, in near real time, more complete, accurate, and human-readable records of the calls, vastly exceeding the regulatory requirements and enabling agents to offer higher-quality service in follow-up conversations with clients. As a result, agents were able to take more calls and remain more engaged with clients during the call itself. Follow-up interactions, too, were more efficient because agents could more quickly understand where we left off with a client.

Because of the high productivity margin, the small number of API calls required (just one each for Whisper and Chat Completion), and the economical cost of input and output tokens for the calls, the service immediately began contributing to call center unit economics.

Technical

The basic pattern for implementing AI services is to construct a pipeline or cascade of operations, often API calls with bespoke processing in between. In this case, the system collected the call recording as an input to a pipeline of API calls to transcribe the call with OpenAI’s Whisper and then summarize that transcript with OpenAI’s chat completion API with gpt-4. The resulting summary was then presented in the call center’s ticketing system.
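As a minimal sketch of this pattern (not the production code), the pipeline below chains a transcription step and a summarization step, with both prompts passed in as plain strings. The names `summarize_call`, `CallRecord`, `openai_transcribe`, and `openai_summarize` are hypothetical. The two wrappers assume version 1 or later of the OpenAI Python SDK, the `whisper-1` model name for hosted Whisper, and an API key in the environment; the step that posts the summary to the ticketing system is left out.

```python
from dataclasses import dataclass
from typing import Callable

# Each stage is an ordinary function, so the real API calls can be replaced
# by stubs when testing the pipeline or iterating on prompts.
Transcriber = Callable[[str, str], str]  # (audio_path, transcription_prompt) -> transcript
Summarizer = Callable[[str, str], str]   # (transcript, summary_prompt) -> summary


@dataclass
class CallRecord:
    """Hypothetical container for what the pipeline produces for one call."""
    audio_path: str
    transcript: str
    summary: str


def summarize_call(
    audio_path: str,
    transcription_prompt: str,
    summary_prompt: str,
    transcribe: Transcriber,
    summarize: Summarizer,
) -> CallRecord:
    """Run the two-step cascade: recording -> transcript -> summary."""
    transcript = transcribe(audio_path, transcription_prompt)
    summary = summarize(transcript, summary_prompt)
    return CallRecord(audio_path, transcript, summary)


def openai_transcribe(audio_path: str, prompt: str) -> str:
    """Assumed wrapper around the hosted Whisper transcription endpoint."""
    from openai import OpenAI  # imported lazily so the sketch loads without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(audio_path, "rb") as audio:
        result = client.audio.transcriptions.create(
            model="whisper-1", file=audio, prompt=prompt
        )
    return result.text


def openai_summarize(transcript: str, prompt: str) -> str:
    """Assumed wrapper around the chat completion endpoint with gpt-4."""
    from openai import OpenAI

    client = OpenAI()
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": prompt},
            {"role": "user", "content": transcript},
        ],
    )
    return response.choices[0].message.content or ""
```

A call such as `summarize_call("call.wav", transcription_prompt, summary_prompt, openai_transcribe, openai_summarize)` would make exactly one request per API. Keeping the prompts as arguments rather than constants is what lets them be changed without touching the pipeline.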

Once this pipeline could be tweaked with an arbitrary prompt for the transcription and summarization, the engineering work was complete, and the task of prompt engineering began. Optimizing the prompt required answering the question, “How can we prompt GPT to produce an accurate and sufficiently detailed summary in a consistent format?” By building custom tooling in Retool, I was able to test many iterations of the prompt over a large corpus of existing call recordings and agent notes. The “winning” prompt produced summaries with substantially more detail than the existing agent notes. These summaries were so consistent and natural that even the most inexperienced agent could quickly scan them to understand the purpose and resolution of an interaction with the call center.
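The actual tooling was built in Retool, so the following is only a Python sketch of the underlying idea: run each candidate prompt over the same corpus and compare the results. Everything here is an assumption made for illustration, including the `Example` type, the section labels in `REQUIRED_SECTIONS`, and the two crude metrics (a format check and a word-count ratio against the agent's note). Neither metric measures accuracy, which still needs human review.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class Example:
    """One corpus item: a call transcript and the note the agent wrote for it."""
    transcript: str
    agent_note: str


# Hypothetical labels a summary must contain to count as consistently formatted.
REQUIRED_SECTIONS = ("Purpose:", "Resolution:")


def has_required_sections(summary: str) -> bool:
    return all(label in summary for label in REQUIRED_SECTIONS)


def evaluate_prompt(
    prompt: str,
    corpus: list[Example],
    summarize: Callable[[str, str], str],
) -> dict[str, float]:
    """Score one prompt over a non-empty corpus."""
    summaries = [summarize(ex.transcript, prompt) for ex in corpus]
    # Share of summaries that follow the expected format.
    format_rate = mean(1.0 if has_required_sections(s) else 0.0 for s in summaries)
    # Average length of the summary relative to the agent's note, in words.
    detail_ratio = mean(
        len(s.split()) / max(len(ex.agent_note.split()), 1)
        for s, ex in zip(summaries, corpus)
    )
    return {"format_rate": format_rate, "detail_ratio": detail_ratio}


def rank_prompts(
    prompts: list[str],
    corpus: list[Example],
    summarize: Callable[[str, str], str],
) -> list[tuple[str, dict[str, float]]]:
    """Return prompts best-first: format consistency, then level of detail."""
    scored = [(p, evaluate_prompt(p, corpus, summarize)) for p in prompts]
    return sorted(
        scored,
        key=lambda item: (item[1]["format_rate"], item[1]["detail_ratio"]),
        reverse=True,
    )
```

Because `summarize` is passed in, the same harness works with a stub during development and with the real model call when comparing prompts for a release.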

Regulatory

I believe regulated Fintech operations are ideal for AI transformation because there is typically an existing and mature internal process for ensuring operational compliance. These processes can be leveraged, with modifications, to test, validate, and monitor the accuracy of these production services. This is in contrast to non-regulated and especially consumer-facing AI products, which often struggle to define “good” or “accurate” and, as a result, find themselves in the headlines when users trigger an unexpected behavior.

In our case, the market standard is that call center QA teams review a sample of calls and associated records for compliance and quality of service, providing scores and feedback to service teams. While new AI services are in development, feedback from these teams on the system’s output helps to ensure a successful, safe, and compliant launch. After deployment, these same processes can ensure that the system consistently provides a high degree of accuracy, even with model and prompt changes over time.
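To illustrate how sample-based QA review can double as monitoring, here is a small sketch under stated assumptions: QA reviewers score each sampled summary between 0 and 1, and the team has agreed a baseline score and a tolerance. The function names, the scoring scale, and the default tolerance are all hypothetical and do not describe the call center's actual process.

```python
import random
from statistics import mean
from typing import Optional, Sequence


def sample_for_review(
    call_ids: Sequence[str], sample_size: int, seed: Optional[int] = None
) -> list[str]:
    """Pick a random sample of calls for QA review, without replacement."""
    rng = random.Random(seed)  # a fixed seed makes the sample reproducible
    k = min(sample_size, len(call_ids))
    return rng.sample(list(call_ids), k)


def needs_attention(
    qa_scores: Sequence[float], baseline: float, tolerance: float = 0.05
) -> bool:
    """Flag a review period whose average QA score fell below the baseline.

    Assumes qa_scores is non-empty and uses the same 0-to-1 scale as baseline.
    """
    return mean(qa_scores) < baseline - tolerance
```

Running the same check before and after a model or prompt change gives a simple signal for whether the change degraded the summaries that reviewers see.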